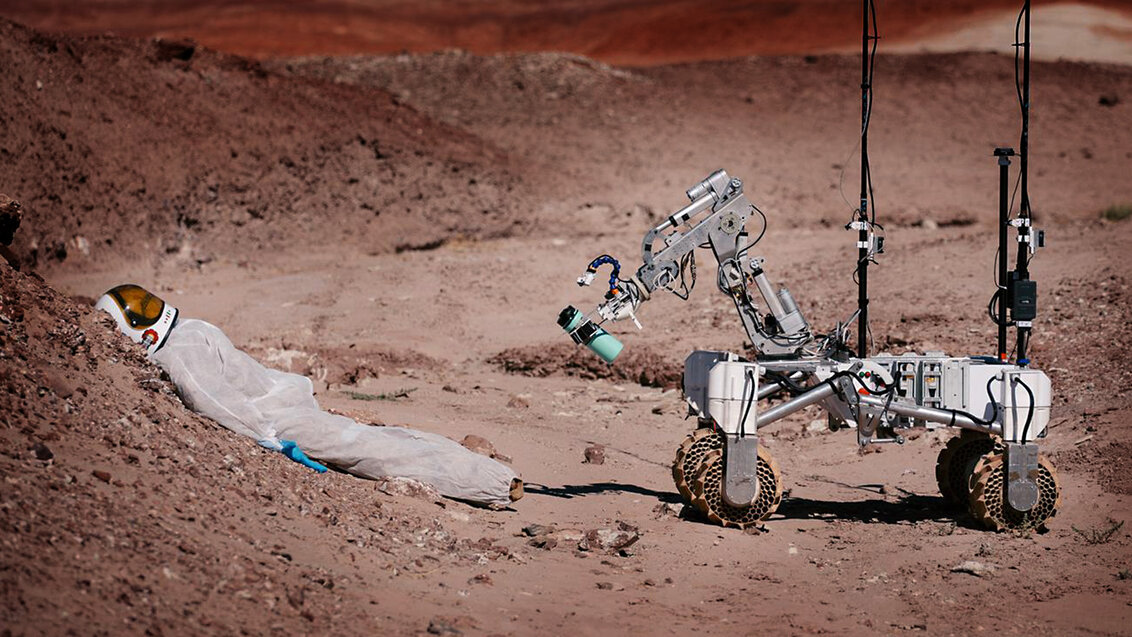

How much do people trust robots? Do we have unlimited confidence in their reliability? In what circumstances do we turn to machines? What are the features of successful human-robot cooperation? These were the questions asked by AGH UST students who investigated the level of trust we place in robots. In their study, they used Kalman, a Martian rover that they have been developing for 5 years.

As the focal point of their research, our students, Nina Bażela and Paweł Graczak, members of the AGH Space Systems Student Research Club, wanted to determine whether the other members of their team have a similar level of trust in the robot, or whether there are factors that influence the level of trust and differentiate it from member to member. Their investigation focused on two areas: the confidence that the team members put in the rover, and the question of whether Kalman can be called a social robot.

The level of trust

The functionalities of the Martian rover are similar to those of robots that work in dangerous environments, such as searching for victims of catastrophes or performing tasks in war zones. Therefore, the level of confidence put in a machine should, by definition, be high enough to trust the robot with such responsible and difficult missions. In conditions like these, it is important that a team working with such robots has an appropriate level of trust in the machines. Should it be too low, the team members would prefer to do dangerous tasks on their own instead of entrusting them to the robots, thereby putting themselves in direct danger. However, if it became too high, the team members would be eager to trust the robots with tasks too complex for them, which, in the event of failure, could pose an actual threat to someone's health or even life.

To answer the question of whether the AGH Space Systems team members have confidence in their robot and what factors influence the level of trust, the students observed the team and conducted a questionnaire survey. The survey and observations were carried out prior to the team’s departure for the University Rover Challenge, for which the team had spent weeks preparing intensively.

As the results show, the level of trust in the rover was similar among all members of the team and was classified as high (on a scale from 1 to 5 it was close to 4). The AGH Space Systems team members are well aware of Kalman’s limits, but this did not negatively affect the level of confidence they put in their creation or the intensity of their emotional attachment to it. At the same time, the study revealed that awareness of the robot’s limits makes the students better prepared for malfunction or failure. Therefore, the robot’s limitations do not come as a surprise to the team and do not decrease their level of confidence in the robot, a fact that is also corroborated by the observations of the team.

The only variable that correlated with the level of trust was the amount of sleep the previous night. It was a weak negative correlation, which means that the less someone had slept, the higher their level of trust in the rover tended to be.

‘This particular result is quite interesting because teams working with robots to search for victims in areas hit by natural disasters or in war zones usually work under conditions of elevated stress and with a reduced amount and quality of sleep. As the results of the study show, this can lead to an increased level of confidence in a robot and to assigning it tasks that are too complex or ignoring its limits. Therefore, we would like to expand on this aspect of our investigation in the future and check whether the same effect occurs in other teams as well’, emphasises Nina Bażela, one of the authors of the research.
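
For readers unfamiliar with the term, the sketch below shows what a weak negative correlation between hours of sleep and trust scores looks like numerically. It assumes Pearson's r, although the article does not state which coefficient the authors used, and the numbers are invented for illustration only; they are not data from the study. The names `pearson_r`, `hours_slept` and `trust_score` are hypothetical.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Return Pearson's correlation coefficient for two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length with at least 2 values")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of products of deviations (proportional to the covariance)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Sums of squared deviations (proportional to the variances)
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / sqrt(var_x * var_y)


# ILLUSTRATIVE VALUES ONLY: these are not the survey responses.
hours_slept = [5, 6, 7, 8, 6, 7, 5, 8]   # hours of sleep the previous night
trust_score = [4, 5, 4, 4, 4, 3, 4, 4]   # trust in the rover on a 1-5 scale

r = pearson_r(hours_slept, trust_score)
print(f"r = {r:.2f}")  # r = -0.22
```

A coefficient of this size is negative but far from -1: less sleep goes with slightly higher trust on average, yet many individual respondents do not follow the pattern, which is why such a result is usually described as weak.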

Kalman as a social robot

The other part of the investigation was based on theoretical assumptions and observation of the team’s work. Its aim was to answer the question of whether Kalman can be described as a social robot. This term is usually reserved for robots capable of interacting with people and possessing special functionalities, for instance, eye-tracking or conversation skills. Although Kalman has none of these capabilities, some definitions do count it among such robots on account of the social context in which it finds itself, as well as the emotional relationship that the team members have established with it. The varied social situations in which Kalman participates include: fairs and conferences, during which the team members use Kalman’s manipulator to hand various objects to the audience; the robot’s birthday party, during which Kalman blew out a candle and cut the cake; and walks on which the team members take the robot.

Observing the members of the team, the authors of this study concluded that despite the rover’s lack of a humanoid appearance and its inability to converse or make eye contact, the research club members do feel an emotional connection with it. Three statements with which the respondents agreed point to this conclusion: ‘Kalman is friendly’, ‘Kalman is kind’, ‘Kalman is a good team member’.

The authors of this investigation presented the results in Helsinki during a scientific conference titled Robophilosophy 2022 – Social Robots in Social Institutions, which focused on the use of robots in various institutions, such as healthcare, government facilities, and educational establishments.
